Keeping AI From Going Off The Rails

Dev Leader Weekly 117

TL;DR:

Not just “Skill Issue”

Prompting will always have ambiguity

Check out the livestream (or watch the recording) on Monday, December 1st at 7:00 PM Pacific!

Guardrails for AI Coding Agents

If you’ve been building software with AI agents for more than five minutes, you already know the pattern.

You give a clear prompt.

You give step-by-step instructions.

You provide examples.

You even add comments and warnings.

And the AI… mostly listens. Until it doesn’t.

Then suddenly your agent is deleting code out of nowhere, ignoring formatting conventions, rewriting patterns that are literally right beside the file it’s editing, and inventing its own test structure like it’s auditioning for a parallel universe.

If this sounds familiar: welcome. You’re in the club.

And just like when we collaborate with real humans, communication with AI always has ambiguity. You can be as clear as humanly possible… and still get something that isn’t exactly what you intended.

So the question becomes: How do we put strong guardrails around our AI agents so they stay aligned with our codebase, our patterns, and our expectations?

In this article, I want to walk through a few ideas. Some are simple prompting techniques. Some revolve around structure and tooling. And one of the most powerful -- especially in .NET -- is using Roslyn analyzers to enforce rules programmatically.

But first, let’s set the stage. There’s also a Code Commute video that gives an overview.

Prompting Isn’t Enough (And That’s Not Your Fault)

A lot of people online love to blame bad AI output on “skill issue.” As if all of these models are perfectly obedient geniuses and you’re just bad at asking questions.

This is nonsense. You can provide:

A clean prompt

Step-by-step instructions

Clear constraints

Examples

Custom Copilot instructions

A fine-tuned agent

A structured repo with established patterns

And the model can still:

Drop indentation and invent crazy code formatting

Delete lines of code... because you weren’t using those anyway, right?

Invent design patterns, since you needed more variety in your code

Ignore test conventions. Heck, even delete those pesky tests since it deleted the code it was testing anyway.

Drift away from your codebase standards

This isn’t because you’re bad at prompting. It’s because AI -- like people -- sometimes misses context, makes assumptions, or just gets weird halfway through a task.

Yes, you can enhance your prompts to help the model stay on track, and that is absolutely a piece of the puzzle. But it’s only one piece, which means we need guardrails beyond prompting.

Let’s walk through some examples.

Guardrail #1: More Structure in Your Workflow

This is the most obvious one, but it’s still worth covering. Fortunately, many of the tools we’ve been using are building these concepts in more seamlessly -- especially the ones with a dedicated “planning” mode.

If you want your AI agent to be effective:

Give it a TODO list

Define steps it must follow

Use checklists

Include acceptance criteria

Add examples it must mimic

Reference specific files whenever possible

A fun “meta” idea here is that if you’re not sure how to provide this information effectively to the LLM... Ask it! Ask an LLM in chat mode how you can take requirements and break them down into tasks for an AI agent to execute on. Ask it how you can structure acceptance criteria clearly and unambiguously for an AI agent.

Structure helps, but it doesn’t fully solve the problem. Sometimes you ask for A → B → C, and it gets as far as B before inventing “Z” for (seemingly) no reason.

That’s where the next guardrails come in.

Guardrail #2: Instructions Files & Custom Agents

GitHub Copilot and other AI tools now support instructions files that the model reads before doing anything else. These help set expectations and reinforce your team’s coding standards.

If you’re a Copilot user, you’re most likely familiar with the ol’ copilot-instructions.md file. But one of the annoying things was that every single tool started to have its own flavor of this... So your repository would end up with 100 similar files just to get the idea across.

We’ve fortunately moved towards Agents.md, so we can get our guidelines in a single spot -- and you can even put custom ones into subfolders for more focused scope.

We can also create custom agents for tools like Claude and GitHub Copilot. Personally, I took the C# expert agent from the Awesome Copilot repo as a starting point for my own custom agent -- especially because the majority of work I am doing is building out C# features. Much like your Agents.md file, this is one more opportunity to provide tailored instructions for your agent to leverage.

But instructions files still only work if the AI:

Reads them

Understands them

Chooses to obey them

And that doesn’t always happen. This is why we need code-backed guardrails, not just textual ones.

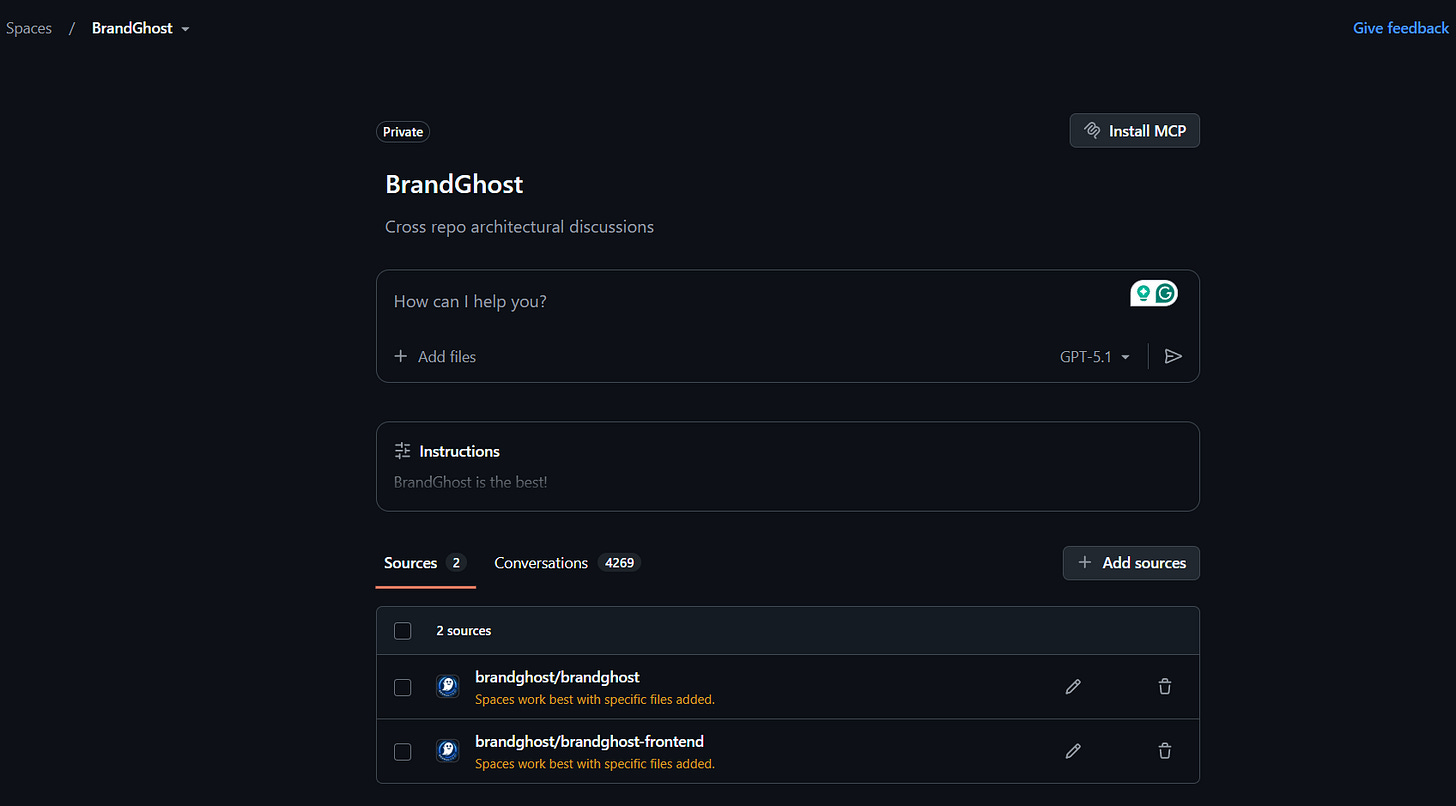

Guardrail #3: Multi-Repository Context (Spaces / Workspace Models)

Tools like GitHub Copilot Spaces, multi-repo context windows, and agent-view environments let your AI see multiple repositories at once.

This helps tremendously because the AI can:

Compare patterns

Observe consistent architecture

Understand testing setups

Pull examples directly from your codebase

But again, context is not enforcement. Context helps, but it doesn’t force compliance. I really like using this approach in conjunction with some of the other guardrails. But... How?

Since the LLM in these scenarios has full context of my codebase, I can:

Ask for help translating requirements into step-by-step actions with MUCH higher confidence because it can see my code

Design features that span frontend and backend (in one of my examples, there is a separate repository for each)

See early on, while working through a design or plan, which patterns the agent is identifying or latching onto -- and steer it away by adding more detail to the instructions it ultimately generates

This is now one of the places I spend the most time when developing. Doing a LOT more upfront back-and-forth with the agent gives me a higher chance of success when it comes to implementation.

But... it’s STILL not a guarantee that things stay on track. So let’s talk about the strongest guardrail of all.

Guardrail #4: Programmatic Enforcement

This is where things get fun.

If you’re in C#, you have access to Roslyn analyzers, which let you write custom static analysis rules. And these aren’t linting in the simple sense.

Analyzers can:

Inspect your syntax tree

Enforce architectural decisions

Block anti-patterns

Require specific patterns

Warn, suggest fixes, or fail the build with errors

Integrate into your CI/CD pipeline

Run every time code compiles

If you enforce a rule through Roslyn, your AI has to comply or the build fails. Yes, potentially the AI can write rule suppressions, but maybe with some better prompting, we can reduce that even further!
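To make the suppression escape hatch concrete, here is a minimal sketch of the two in-source suppression forms an agent might reach for. The rule ID `DEVL001` and the `UserService` type are hypothetical placeholders, not something from a real analyzer package. Both forms are easy to search for during review, which is one way to notice when an agent is working around a rule rather than following it.

```csharp
using System.Diagnostics.CodeAnalysis;

// Hypothetical type standing in for whatever your rule protects.
public sealed class UserService { }

public static class SuppressionForms
{
    // Form 1: pragma directives switch the rule off for a span of lines.
    public static UserService ViaPragma()
    {
#pragma warning disable DEVL001 // hypothetical rule ID
        return new UserService();
#pragma warning restore DEVL001
    }

    // Form 2: an attribute suppresses the rule for a whole member.
    [SuppressMessage("Testing", "DEVL001", Justification = "Hypothetical example")]
    public static UserService ViaAttribute() => new UserService();
}
```

If suppressions like these start showing up in agent-written changes, that is a signal to tighten the instructions or add a review step that rejects them.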

If you aren’t a C# developer, not to worry. Analyzers are effectively like a linter that you might use for style, just more powerful. Your language may have something similar conceptually, so the meta point with analyzers is to programmatically catch these issues.

A Simple (Conceptual) Analyzer Example

Let’s say you want all tests to resolve the System Under Test (SUT) through dependency injection rather than manually constructing it.

I know that for one of my particular projects, I have had a lot of success with types being resolved from the dependency container to help:

Reduce over-mocking things

Reduce refactoring overhead if constructor params / dependencies change

Keep things closer to how they will be in production code

We could, in theory, write an analyzer that flags any direct instantiation:

    // ❌ Not allowed
    var service = new UserService(mockRepo.Object);

    // ✔ Must use DI
    var service = Resolve<UserService>();
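The `Resolve<T>()` helper isn’t shown above, so here is one way it might look. This is a sketch under assumptions: it uses the `Microsoft.Extensions.DependencyInjection` package as the container, and `ContainerTestBase`, `IUserRepository`, `InMemoryUserRepository`, and the shape of `UserService` are all hypothetical names invented for illustration. The test framework attribute is left off so the example does not depend on a particular test library.

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;

// Hypothetical types standing in for real application code.
public interface IUserRepository
{
    string FindName(int id);
}

public sealed class InMemoryUserRepository : IUserRepository
{
    public string FindName(int id) => id == 1 ? "Ada" : "stranger";
}

public sealed class UserService
{
    private readonly IUserRepository _repository;

    public UserService(IUserRepository repository) => _repository = repository;

    public string Greet(int id) => $"Hello, {_repository.FindName(id)}!";
}

// Hypothetical base class: builds one container per test class instance
// and exposes Resolve<T>() so tests never call constructors directly.
public abstract class ContainerTestBase : IDisposable
{
    private readonly ServiceProvider _provider;

    protected ContainerTestBase()
    {
        var services = new ServiceCollection();
        ConfigureServices(services);
        _provider = services.BuildServiceProvider();
    }

    // Tests can override this to swap in fakes for specific dependencies.
    protected virtual void ConfigureServices(IServiceCollection services)
    {
        services.AddSingleton<IUserRepository, InMemoryUserRepository>();
        services.AddTransient<UserService>();
    }

    protected T Resolve<T>() where T : notnull => _provider.GetRequiredService<T>();

    public void Dispose() => _provider.Dispose();
}

public sealed class UserServiceTests : ContainerTestBase
{
    // A real test would carry the framework's test attribute and assert on the result.
    public string GreetKnownUser() => Resolve<UserService>().Greet(1);
}
```

If a constructor parameter of `UserService` changes, only the registrations in `ConfigureServices` need to move, which is the refactoring benefit described earlier. Note that the base class itself calls `new ServiceCollection()`, so a rule that bans every `new` in test code would need an exemption for it.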

A Roslyn analyzer for this can check for any new ClassName(...) inside test projects and block it.

Here’s a simplified skeleton of how that might look:

    using System.Collections.Immutable;
    using Microsoft.CodeAnalysis;
    using Microsoft.CodeAnalysis.CSharp;
    using Microsoft.CodeAnalysis.CSharp.Syntax;
    using Microsoft.CodeAnalysis.Diagnostics;

    [DiagnosticAnalyzer(LanguageNames.CSharp)]
    public class NoDirectInstantiationInTestsAnalyzer : DiagnosticAnalyzer
    {
        private static readonly DiagnosticDescriptor Rule = new(
            id: "DEVL001",
            title: "Do not instantiate services directly in tests",
            messageFormat: "Use the test DI container to resolve '{0}' instead of directly instantiating it.",
            category: "Testing",
            defaultSeverity: DiagnosticSeverity.Error,
            isEnabledByDefault: true
        );

        public override ImmutableArray<DiagnosticDescriptor> SupportedDiagnostics => ImmutableArray.Create(Rule);

        public override void Initialize(AnalysisContext context)
        {
            // Skip generated code and let the analyzer run concurrently
            context.ConfigureGeneratedCodeAnalysis(GeneratedCodeAnalysisFlags.None);
            context.EnableConcurrentExecution();

            // Run AnalyzeObjectCreation for every explicit "new Type(...)" expression
            context.RegisterSyntaxNodeAction(AnalyzeObjectCreation, SyntaxKind.ObjectCreationExpression);
        }

        private void AnalyzeObjectCreation(SyntaxNodeAnalysisContext context)
        {
            var objectCreation = (ObjectCreationExpressionSyntax)context.Node;

            // Very naive example: flag anything created via "new" in a file whose path contains "Tests"
            if (context.SemanticModel.SyntaxTree.FilePath.Contains("Tests"))
            {
                var typeName = objectCreation.Type.ToString();
                var diagnostic = Diagnostic.Create(Rule, objectCreation.GetLocation(), typeName);
                context.ReportDiagnostic(diagnostic);
            }
        }
    }

Is this production-ready? No. You’d probably be upset pretty quickly if you needed to instantiate a new List<T> and this thing was preventing you.

Does it illustrate the idea clearly? Hopefully! We can write rules on top of the basic rules of the language.
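One way to get past the `List<T>` problem is to ask the semantic model what is actually being constructed and flag only the types you care about. The sketch below is a variation on the skeleton, not a finished rule, and it rests on two loudly labeled assumptions: a project counts as a test project if it references an assembly whose name starts with `xunit`, and “services” are identified by a type name ending in `Service`. The class name and the `DEVL002` ID are hypothetical, and your codebase may need different conventions (a marker interface, a namespace check, or a different test framework).

```csharp
using System;
using System.Collections.Immutable;
using System.Linq;
using Microsoft.CodeAnalysis;
using Microsoft.CodeAnalysis.CSharp;
using Microsoft.CodeAnalysis.CSharp.Syntax;
using Microsoft.CodeAnalysis.Diagnostics;

[DiagnosticAnalyzer(LanguageNames.CSharp)]
public sealed class NoDirectServiceInstantiationInTestsAnalyzer : DiagnosticAnalyzer
{
    private static readonly DiagnosticDescriptor Rule = new(
        id: "DEVL002", // hypothetical ID for this variation
        title: "Do not instantiate services directly in tests",
        messageFormat: "Resolve '{0}' from the test DI container instead of calling its constructor",
        category: "Testing",
        defaultSeverity: DiagnosticSeverity.Error,
        isEnabledByDefault: true);

    public override ImmutableArray<DiagnosticDescriptor> SupportedDiagnostics =>
        ImmutableArray.Create(Rule);

    public override void Initialize(AnalysisContext context)
    {
        context.ConfigureGeneratedCodeAnalysis(GeneratedCodeAnalysisFlags.None);
        context.EnableConcurrentExecution();

        context.RegisterCompilationStartAction(start =>
        {
            // Assumption: referencing an xunit assembly marks a test project.
            var referencesXunit = start.Compilation.ReferencedAssemblyNames
                .Any(identity => identity.Name.StartsWith("xunit", StringComparison.OrdinalIgnoreCase));
            if (!referencesXunit)
            {
                return; // Not a test project, so register nothing.
            }

            // Only explicit "new Type(...)" is covered here; target-typed "new()"
            // is a separate syntax kind and is not handled in this sketch.
            start.RegisterSyntaxNodeAction(AnalyzeObjectCreation, SyntaxKind.ObjectCreationExpression);
        });
    }

    private static void AnalyzeObjectCreation(SyntaxNodeAnalysisContext context)
    {
        var creation = (ObjectCreationExpressionSyntax)context.Node;
        var type = context.SemanticModel.GetTypeInfo(creation, context.CancellationToken).Type;

        // Assumption: types named "...Service" are the ones that must come from DI.
        if (type is null || !type.Name.EndsWith("Service", StringComparison.Ordinal))
        {
            return;
        }

        context.ReportDiagnostic(Diagnostic.Create(Rule, creation.GetLocation(), type.Name));
    }
}
```

With this shape, `new List<int>()` passes untouched while `new UserService(...)` in a test project is reported. Checking project references instead of file paths also avoids false positives in production files that happen to have “Tests” in their path.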

Analyzers like this help create hard guardrails:

AI tries to manually instantiate something → build breaks

AI invents a pattern that isn’t used anywhere → build breaks

AI formats tests wrong → build breaks

AI ignores a core rule → build breaks

AI stops being creative where it shouldn’t be. It can only operate inside the boundaries you set.

When I review the work of my agents, I look for anti-patterns they are still using and expected patterns they are missing. I then take those examples (generally from GitHub Copilot) back to my Spaces in GitHub and talk to the LLM about analyzer ideas that could enforce the expected patterns.

Loop. Rinse. Repeat.

Putting It All Together

The truth (no matter what any AI Bro says): AI isn’t perfect, prompt engineering isn’t magic, and agents will sometimes drift no matter what you write.

... People do this too.

Guardrails don’t eliminate ambiguity. They reduce the impact of that ambiguity.

... This applies to people, too.

You can guide your AI with:

Clear instructions

Good examples

Strong codebase conventions

Multi-repo context

Custom agents

Workspace instructions

But when you add programmatic guardrails like Roslyn analyzers? Now you’re operating in a world where AI must follow your rules.

And that makes your AI coding workflow:

More predictable

More consistent

More aligned with your architecture

Less frustrating

Dramatically more scalable

If you want AI to code like someone who understands your standards, teach it through code -- not just text.

As always, thanks so much for your support! I hope you enjoyed this issue, and I’ll see you next week.

Nick “Dev Leader” Cosentino

social@devleader.ca
